This Site Runs on a Raspberry Pi in My Living Room

UPDATE 2023-03-07: I switched the Rust web framework from tide to axum.

Running a website on a Raspberry Pi isn’t anything new. There are dozens of tutorials on how to do it, and thousands of sites exactly like this.

What is hopefully new and worth saying are all of the tiny decisions I made to create the site from the ground up, and the values that guided those decisions.

This post breaks down how this little place on the internet works.

But first some background.

Why Do I Need a Personal Site?

I’ve been meaning to set up a personal site for ages, but haven’t ever found the time to get down to it.

So why now?

Honestly, I only now have something important enough to say.

During a year of occasional microblogging on Mastodon I’ve come up with almost two dozen ideas for posts that can’t be squeezed into toots. I also wanted to finally become self-reliant with the technology I use, and especially to never rely on corporations for reaching people.

What is the Site About?

When I decided to go ahead with the project, I wanted to do it without compromises. This is my site and it reflects what I think is important.

Principles

Borrowing and extending from the excellent small technology principles, the principles relevant for this site are:

Share alike. All source code is licensed with AGPLv3 and all text content with CC BY-NC-SA 4.0.

Private by default. No tracking, and no storing or logging of any personally identifiable information.

Non-commercial. There is no ulterior motive to make a profit. What you see is all there is.

Inclusive. Accessibility is not an afterthought[1]. Low-power devices need to be supported.

Ecological. Natural resources must not be wasted, and energy use must be low.

Functional Requirements

The site needs to have these features:

Three sections: about, work (coming later) and blog.

Easy for me to write new posts.

Possible to send a preview link of unfinished posts to friends.

Supports (nearly) all web browsers and Gemini clients.

Has an RSS/Atom feed.

Has a statistics page that shows how many recent visits each path has received, and these statistics are backed up.

Only I can see 404 requests and server errors.

Eventually, I can use this site also for our family’s P2P app backups (more on this in future blog posts).

Cross-Functional Requirements

All of the above features should:

be cheap,

be fast,

be secure,

survive a small hug of death,

have low maintenance, and

have reasonably good availability.

Resources

Finally, I have these resources at my disposal:

I have a few weeks.

I can program.

I have a lot of motivation to learn Rust.

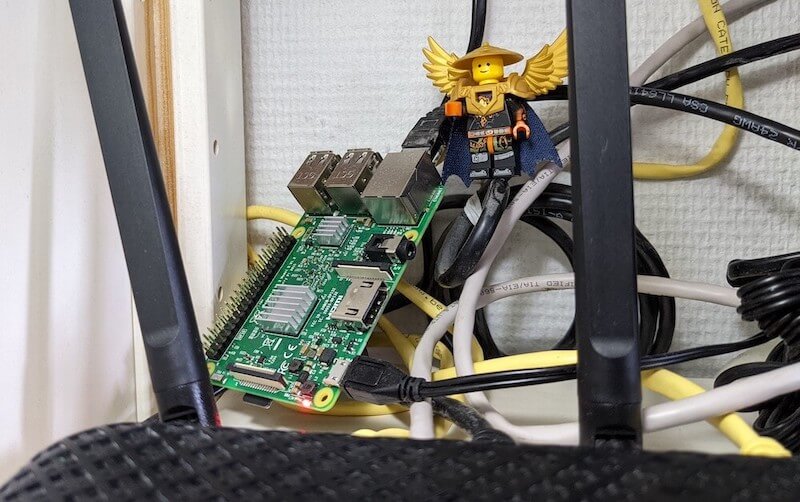

I own a Raspberry Pi 3B[2] that’s been sitting on a bookshelf since 2017.

Our apartment building has a very good internet connection.

I have a green email provider that gives me 2GB of storage.

The Part Where I Explain How It Works

With that out of the way, let’s get down to the nitty-gritty. If you’re not interested in the technical side of things you can skip to conclusions.

Disclaimer: All of my code referenced below is pre-alpha and not meant to be used by anyone except me. Proper error handling and tests in particular are completely missing. I’m testing in production.

Hardware

As you should already know by now, I decided to use my Raspberry Pi 3B for hosting. Because I’m a full-time activist, every euro I spend shortens the time I have left before I need to find income. That means monthly recurring charges in particular add up fast. Even a modest 5€ per month for a VM is not nothing for me. Also, for environmental reasons, I try my best to avoid being the reason new hardware gets made.

Unfortunately, the Pi didn’t come with a charger, memory card or heat sinks, so I did have to buy them new. Together that cost me 53€.

OS

Because I only use the Pi as a server, a headless installation of Raspberry Pi OS Lite was the obvious choice. After that, I documented on GitHub[3] the steps needed to set up SSH, forward ports in my home router, and point Cloudflare’s DNS[4] to the IP address.

For reasons I’ll explain in a future blog post, I also needed a newer libc than was included in Debian stable ("bullseye"), which meant I had to upgrade to testing ("bookworm").

Because I only use the essentials from the OS, everything luckily works just fine.

To make sure I don’t miss out on security patches, I run a nightly OS upgrade that also reboots the server if needed. I’m aware that this can cause the entire site to break horribly, which is why I set up an Uptime Robot monitor that pings the site so that I know when it breaks. (And yes, I know a monitor can’t prevent downtime; keep on reading.)

Finally, I lowered the energy consumption of the Pi by turning off all unnecessary services. With everything turned off, the board should use between 1W and 3W, costing me under 2€ per year in electricity.
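The arithmetic behind a yearly figure like this can be sketched as follows. This is only an illustration: the function names are made up for this example, and the electricity price is a hypothetical input rather than a number from this post.

```rust
/// Hours in a non-leap year.
const HOURS_PER_YEAR: f64 = 24.0 * 365.0;

/// Energy used over one year, in kWh, for a constant power draw in watts.
fn annual_kwh(watts: f64) -> f64 {
    watts * HOURS_PER_YEAR / 1000.0
}

/// Yearly electricity cost in euros.
/// `eur_per_kwh` is a hypothetical input, not a price taken from the post.
fn annual_cost_eur(watts: f64, eur_per_kwh: f64) -> f64 {
    annual_kwh(watts) * eur_per_kwh
}
```

A constant 1W draw comes to 8.76 kWh per year and 3W to 26.28 kWh per year; the cost then depends on the local price per kWh.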

Web Content

I want to output both Gemtext and HTML from my blog posts. The obvious choice for the source format is Markdown but I ended up going with AsciiDoc because I will likely need the built-in bibliography support for some posts.

There was no Gemini converter for Asciidoctor.js so I wrote my own. While I was at it, I also wrote a converter to get JSON metadata out of adocs.

For CSS/JS I opted to go with SvelteKit with adapter-static. It has been an absolute delight. Unfortunately, I haven’t yet figured out a way to wire up Asciidoctor.js to SvelteKit, so I use a hacky pre-build script to generate Svelte files[5], Gemtext files and OpenGraph images.

HTTP and Gemini Servers

For HTTP I decided to go with the axum web framework for Rust. Now I can almost hear some of you thinking I should have just installed nginx/acme.sh or [insert your favourite web server here] and be done with it, but bear with me. I had my reasons.

First, I have many other plans for the server than just serving static files (stay tuned for details in future posts). Second, I wanted an in-memory cache which is smart enough to inline CSS for cold loads, but not internal navigation (this has to do with how SvelteKit works). Third, I wanted to get better at Rust.

Because of my non-standard needs I had to write my own static file serving to be able to serve Svelte files, return the appropriate headers and inline CSS for blog posts.

ACME support came out of the box with rustls-acme and compression from tower-http. Finally, I made my own small HTTP-to-HTTPS redirect endpoint and implemented support for HSTS.
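To give an idea of what the redirect and HSTS pieces boil down to, here is a minimal framework-independent sketch using only the standard library. It is not the site’s actual axum code: the function names and the max-age value used in the example are assumptions made for illustration.

```rust
/// Drops a trailing ":port" from a Host header value, if present.
/// Bracketed IPv6 literals such as "[::1]:80" are handled as well.
fn strip_port(host: &str) -> &str {
    match host.rsplit_once(':') {
        Some((name, port))
            if !port.is_empty() && port.bytes().all(|b| b.is_ascii_digit()) =>
        {
            name
        }
        _ => host,
    }
}

/// Builds the `Location` value for redirecting a plain-HTTP request
/// to the same host and path over HTTPS (default port 443).
fn https_location(host: &str, path_and_query: &str) -> String {
    format!("https://{}{}", strip_port(host), path_and_query)
}

/// Builds the value of the `Strict-Transport-Security` response header.
fn hsts_value(max_age_secs: u64, include_subdomains: bool) -> String {
    if include_subdomains {
        format!("max-age={}; includeSubDomains", max_age_secs)
    } else {
        format!("max-age={}", max_age_secs)
    }
}
```

For example, `https_location("example.org:80", "/blog")` gives `https://example.org/blog`, which the redirect endpoint would send in a `Location` header together with a permanent redirect status. The HSTS header only has an effect when it is sent over HTTPS.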

For Gemini, I chose the Agate server. It works as advertised, no complaints.

Statistics

Google Analytics is cancer and I’m very happy it’s looking like it will get outlawed in the EU. It’s a given I was not going to ever spy on you lovely people. But at the same time, I do want to know something about what’s going on with my site. Namely:

How many daily visits are there to my site and to what paths?

What requests are returning 404?

What are the biggest traffic sources?

This is a common problem and there are many industry standard solutions. But when I started looking into them, I realized they were all way overkill for my very modest needs. I’m not going to host a time series database. I’ll never need any fancy visualizations. I’m never going to analyze the data.

Because I also don’t want to depend on too many external libraries, I just decided to do it myself.

First, I do asynchronous access logging in a low-priority thread, which is also good for performance. The log files are named by UTC date.

The log files have space delimited lines, one per GET request, that contain just the path and the status code.
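A minimal sketch of this kind of logging, assuming a plain standard-library thread and channel instead of an async runtime. The standard library has no portable way to lower a thread’s priority, so that part is left out. The names `AccessEntry` and `spawn_access_logger` are hypothetical, and the field order (path first, then status) is an assumption.

```rust
use std::io::Write;
use std::sync::mpsc::{self, Sender};
use std::thread::{self, JoinHandle};

/// One access-log entry: the request path and the response status code.
struct AccessEntry {
    path: String,
    status: u16,
}

/// Spawns a background thread that owns `sink` and writes one
/// space-delimited line per entry. Request handlers only send on the
/// channel, so they never wait for the write itself.
/// The join handle gives the sink back once all senders are dropped.
fn spawn_access_logger<W>(mut sink: W) -> (Sender<AccessEntry>, JoinHandle<W>)
where
    W: Write + Send + 'static,
{
    let (tx, rx) = mpsc::channel::<AccessEntry>();
    let handle = thread::spawn(move || {
        // The loop ends when every Sender has been dropped.
        for entry in rx {
            // In this sketch a failed log write is silently ignored.
            let _ = writeln!(sink, "{} {}", entry.path, entry.status);
        }
        let _ = sink.flush();
        sink
    });
    (tx, handle)
}
```

In a real server the sink would be the current day’s log file opened in append mode; here any `Write` implementation works, which makes the sketch easy to try with an in-memory buffer.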

I don’t need log rotation, but I’ll probably need to implement deleting old log files later.

From this access log, I then update a daily (UTC) metric file once a minute. The metric files are served as JSON from an HTTP endpoint and rendered in a Svelte page.
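The aggregation step could look roughly like this. It is a sketch under assumptions: each line is taken to be `path status` in that order, malformed lines are skipped, and the function names are hypothetical. Serializing the result to JSON is left out.

```rust
use std::collections::BTreeMap;

/// Parses one "path status" line. Returns None for malformed lines.
fn parse_line(line: &str) -> Option<(&str, u16)> {
    let (path, status) = line.trim_end().rsplit_once(' ')?;
    Some((path, status.parse().ok()?))
}

/// Counts how many requests in `log` ended with `status`, per path.
/// A BTreeMap keeps the paths sorted, which gives stable output.
fn count_by_path(log: &str, status: u16) -> BTreeMap<String, u64> {
    let mut counts = BTreeMap::new();
    for (path, code) in log.lines().filter_map(parse_line) {
        if code == status {
            *counts.entry(path.to_string()).or_insert(0) += 1;
        }
    }
    counts
}
```

Calling `count_by_path(log, 200)` on a day’s log gives the visit counts per path, and `count_by_path(log, 404)` gives the not-found requests.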

If you want you can visit the live statistics page yourself.

As for traffic sources, I was initially planning on adding them, but after a little research, I realized that it’s easy to leak unwanted data in the Referer header.

This can happen for example if there is a link to my site from an internal forum and the forum accidentally has an invalid configuration.

Even though the mistake would not technically be mine, I still don’t want to be responsible for storing the sensitive URL.

So for my last requirement, traffic sources, I settled on a manual approach: if there is a spike in traffic, I can just do a DuckDuckGo search for the URL and hopefully find the source.

And if I can’t find the source, then I can’t. That’s fine.

Finally, I realized that because my public statistics page renders request paths, showing 404 responses would make it possible for someone to overwhelm the stats page or write nasty things for everyone to see.

I don’t want to start moderating any content on this site, which is why I added a simple secret query string I can use on the stats page to view the 404 requests.
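A minimal sketch of that kind of gate, with several assumptions: the query parameter is called `secret`, server errors are hidden along with 404s (as in the requirements list), and the types and function names are made up for this example. It does no percent-decoding and uses an ordinary string comparison, not a constant-time one.

```rust
/// One row of the statistics page.
#[derive(Debug, Clone, PartialEq)]
struct PathStat {
    path: String,
    status: u16,
    visits: u64,
}

/// Extracts the value of `key` from a raw query string like "a=1&b=2".
fn query_value<'a>(query: &'a str, key: &str) -> Option<&'a str> {
    query
        .split('&')
        .filter_map(|pair| pair.split_once('='))
        .find(|(k, _)| *k == key)
        .map(|(_, v)| v)
}

/// Rows that only the site owner should see: 404s and server errors.
fn is_private(status: u16) -> bool {
    status == 404 || (500..=599).contains(&status)
}

/// Returns the rows a visitor may see. Private rows are included only
/// when the query string carries the expected secret.
fn visible_stats(stats: &[PathStat], query: &str, expected_secret: &str) -> Vec<PathStat> {
    let authorized = query_value(query, "secret") == Some(expected_secret);
    stats
        .iter()
        .filter(|s| authorized || !is_private(s.status))
        .cloned()
        .collect()
}
```

Because attacker-chosen paths only ever appear in the private rows, nothing a stranger requests can show up on the public page.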

Email Backups

The biggest thing missing from a Pi compared to a VM is backups. If the Pi dies, all data is lost.

For the static site content, this is not a problem, because all sources are in git, but those metrics files aren’t. Also, I plan to store other personal data on the Pi at a later time so I need a backup solution.

I talked recently with Holger from Delta Chat and learned that there are many places in the world where international internet access costs more than national access. What is almost certainly available nationally is email. Because I want to write inclusive software and keep my expenses low, I realized that I can just use email as storage. Email can’t realistically be used to back up pictures or videos, but for backing up text data, which is what I’m exclusively using it for, it’s plenty good enough.

To make this happen, I wrote a backup process that creates a tar.gz file from the metrics files.

It then encrypts the archive with rage using the same public key I use to SSH to the Pi, and lastly sends the archive with SMTP to myself.

On the email provider side, I have a rule which directs the backup email to a folder.

If I need to restore the content, I can decrypt the archive using the private SSH key, and unpack the content to a new Pi.
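The archive, encrypt and decrypt steps can be sketched by shelling out to the `tar` and `rage` command-line tools. This is an assumption made for illustration: the actual backup process may well use libraries instead, and the SMTP step is left out. The function names and all paths are hypothetical.

```rust
use std::io;
use std::path::Path;
use std::process::Command;

/// `tar -czf <archive> -C <metrics_dir> .`
fn tar_command(metrics_dir: &Path, archive: &Path) -> Command {
    let mut cmd = Command::new("tar");
    cmd.arg("-czf").arg(archive).arg("-C").arg(metrics_dir).arg(".");
    cmd
}

/// `rage -R <ssh_public_key> -o <encrypted> <archive>`
/// Encrypts to the SSH public key given as a recipients file.
fn encrypt_command(ssh_public_key: &Path, archive: &Path, encrypted: &Path) -> Command {
    let mut cmd = Command::new("rage");
    cmd.arg("-R").arg(ssh_public_key).arg("-o").arg(encrypted).arg(archive);
    cmd
}

/// `rage -d -i <ssh_private_key> -o <archive> <encrypted>`
/// Decrypts with the matching SSH private key when restoring.
fn decrypt_command(ssh_private_key: &Path, encrypted: &Path, archive: &Path) -> Command {
    let mut cmd = Command::new("rage");
    cmd.arg("-d").arg("-i").arg(ssh_private_key).arg("-o").arg(archive).arg(encrypted);
    cmd
}

/// Runs a prepared command and turns a non-zero exit into an error.
fn run(mut cmd: Command) -> io::Result<()> {
    let status = cmd.status()?;
    if status.success() {
        Ok(())
    } else {
        Err(io::Error::new(
            io::ErrorKind::Other,
            format!("{:?} exited with {}", cmd.get_program(), status),
        ))
    }
}
```

A backup run would then be `run(tar_command(..))` followed by `run(encrypt_command(..))`, with the encrypted file attached to the email. Restoring is `run(decrypt_command(..))` and unpacking the archive on the new Pi.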

At some point, I’ll need to do some automatic cleaning of old backups, but for now, it works great.

DevOps

For me, the most stressful part about development is manual ops work. I just hate ssh’ing into a server and running ad hoc commands to get things to work. That’s why I feel the effort to put ops configuration into version control is always worth it, no matter how small the project.

I looked around for new DevOps tools but concluded that Ansible is still the best tool for me. I’m still not a fan of Ansible, but can now appreciate its relative simplicity more. So I wrote a few playbooks that GitHub Actions runs for me automatically when the right git push comes in. This cost me maybe three work days, but I think it was worth it. I now have (maybe illusory) peace of mind that if my Pi breaks, I can initialize a new one relatively fast.

Performance

By far the biggest reason websites are slow and waste energy is content bloat. JavaScript bundles are huge, and there’s unnecessary CSS, custom fonts, videos and unoptimized images. That’s why I decided that reading a blog post must be possible with just a TLS handshake followed by one HTTP/1.1 GET request.

Svelte is great in that if I don’t use JavaScript in some paths, there is also no JavaScript in the generated static files for those paths.

Because I’m expecting almost all of the visits to be to a single blog post, inlining CSS makes sense.

I don’t plan on making a habit of using images in my blog posts, but I decided to inline AVIF files as Base64 if they are small enough.

As a finishing touch, I use an empty image as a favicon[6].
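The rule for inlining small images mentioned above can be sketched like this. The size threshold is a hypothetical parameter (the post doesn’t give one), the function names are made up, and the Base64 encoder is written by hand only to keep the example free of dependencies.

```rust
/// The standard Base64 alphabet (RFC 4648).
const ALPHABET: &[u8; 64] =
    b"ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

/// Encodes bytes as standard Base64 with '=' padding.
fn base64_encode(input: &[u8]) -> String {
    let mut out = String::with_capacity((input.len() + 2) / 3 * 4);
    for chunk in input.chunks(3) {
        // Pack up to three bytes into a 24-bit group, zero-filled.
        let b0 = u32::from(chunk[0]);
        let b1 = u32::from(chunk.get(1).copied().unwrap_or(0));
        let b2 = u32::from(chunk.get(2).copied().unwrap_or(0));
        let n = (b0 << 16) | (b1 << 8) | b2;
        out.push(ALPHABET[(n >> 18) as usize & 63] as char);
        out.push(ALPHABET[(n >> 12) as usize & 63] as char);
        // Missing input bytes are represented by padding characters.
        out.push(if chunk.len() > 1 { ALPHABET[(n >> 6) as usize & 63] as char } else { '=' });
        out.push(if chunk.len() > 2 { ALPHABET[n as usize & 63] as char } else { '=' });
    }
    out
}

/// Returns a `data:` URI for the image if it is at most
/// `max_inline_bytes` long, or None if it should stay a separate file.
fn inline_avif(bytes: &[u8], max_inline_bytes: usize) -> Option<String> {
    if bytes.len() <= max_inline_bytes {
        Some(format!("data:image/avif;base64,{}", base64_encode(bytes)))
    } else {
        None
    }
}
```

The returned string can be used directly as the `src` of an `img` element, so the image arrives with the page and costs no extra request. Base64 grows the data by about a third, which is why a size limit makes sense.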

With all of that out of the way, it was time to find out how fast I could get the server to work. Given that I had no previous experience with a Pi, and because I’m an idiot, I first implemented an in-memory cache against the file system. File IO is always the bottleneck, right? Wrong.

Turns out brotli compression on the Pi takes over half a second per request. For this reason, I changed the file system cache to a cache middleware for axum, so that I cache the entire compressed HTTP response.

Because that compression penalty was so high, I felt it had a significant negative impact on the site, which meant I didn’t want to cache only based on time-to-live, e.g. for five minutes. That’s why I now cache permanently and implemented a listener for a Unix socket that does cache busting on demand, which I call with Ansible when the site updates. To top it off, I whipped up a hacky brotli cache warm-up bash script that runs curl after cache busting. Now, most of the time no real visitor has to wait.

With all that in place, this page (including the image) is 48 kB brotli-compressed and loads in 50 ms when I’m physically near the Pi.
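The core idea, a cache of finished responses that never expires and is only emptied on demand, can be sketched with the standard library alone. This is not the actual axum middleware: `ResponseCache` and its methods are hypothetical names, and the Unix socket listener that would call `bust` is left out.

```rust
use std::collections::HashMap;
use std::sync::{Arc, RwLock};

/// Caches fully rendered, already compressed response bodies by path.
/// Entries never expire; they are removed only by `bust`.
#[derive(Default)]
struct ResponseCache {
    entries: RwLock<HashMap<String, Arc<Vec<u8>>>>,
}

impl ResponseCache {
    /// Returns the cached body for `path`, or runs `render` (the
    /// expensive read-and-compress step) once and stores its result.
    fn get_or_render<F>(&self, path: &str, render: F) -> Arc<Vec<u8>>
    where
        F: FnOnce() -> Vec<u8>,
    {
        // The read guard is released at the end of this statement.
        let cached = self.entries.read().unwrap().get(path).cloned();
        if let Some(hit) = cached {
            return hit;
        }
        // Render outside the lock so other paths are not blocked.
        // Two simultaneous misses may both render; the first insert wins.
        let body = Arc::new(render());
        let mut entries = self.entries.write().unwrap();
        entries.entry(path.to_string()).or_insert(body).clone()
    }

    /// Drops every entry. Meant to be called when the site is redeployed.
    fn bust(&self) {
        self.entries.write().unwrap().clear();
    }
}
```

After `bust`, the next request for each path pays the compression cost once, which is what a warm-up script that requests every page right after a deploy takes off real visitors.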

Pretty cool if I say so myself!

Availability and Fault Tolerance

Finally a few words about the elephant in the room: availability.

I feel like the reason why many of these kinds of Raspberry Pi hacks have only been demos or semi-private websites is that developers value availability too highly. I know I’ve spent months of my professional programming career working on redundancy, load balancers, auto-scaling and all that jazz to try to maximize uptime.

And sometimes prioritizing availability is the right call.

But for this site?

I’m just not that important.

If the site is down, it’s down. I hope to have many important things to say, but at the end of the day, I’m just one voice. If the people reading my posts don’t come back if the site is down, I’ve done something else wrong.

But what about DoS attacks?

Well, it would suck if someone did that. My Pi would suffocate and the site would go down. I’d have to try to rotate my IP, take the site offline, and hope my internet service provider doesn’t get angry with me.

I know the common practice for this is to just use e.g. Cloudflare’s DDoS services and hide my real IP, but that’s neither honest nor sustainable. I can’t claim to be fighting for a democratic, post-capitalist internet and at the same time rely on freebies from corporations.

To put it in familiar terms: you might think you’re Han Solo hiding the Millennium Falcon by parking it on the Star Destroyer, but in reality, you’re the younger Han Solo leading the Empire to the Rebel base because you have a homing beacon on your ship.

Conclusion

It’s baffling how much computing power you can cram into a small board nowadays. It gets even more baffling when you compare that to what the software industry is selling as best practices.

Development grounded in moral principles and targeting low-power devices changes the development process in fascinating ways and opens so many avenues for invention.

I urge every developer reading this to try. The result might just be a better, more inclusive and more democratic internet.

What do you stand for and how does that show in what you build?